When it comes to WebRTC testing, there aren’t many feature-rich tools on the market. Loadero is one of them, and testRTC is another widely known option, so we are frequently asked how the two compare. To answer that question in detail, this blog post attempts a comprehensive comparison of the two tools. Both Loadero and testRTC are good options for measuring the WebRTC performance of applications built on the technology. Both offer extensive configuration options for simulating specific test scenarios against your WebRTC app, and both return WebRTC statistics as output. Still, there are features and differences worth comparing before you decide which tool fits your WebRTC testing needs. We’ll start with short overviews.

Overview of testRTC

testRTC lets you run tests from 10 different locations, using the Google Chrome browser to simulate real users. It supports reusable test scripts, which are web UI automation scripts written in JavaScript. Importantly for WebRTC testing, it also allows configuring network conditions and media settings, and it returns WebRTC statistics as test run results.

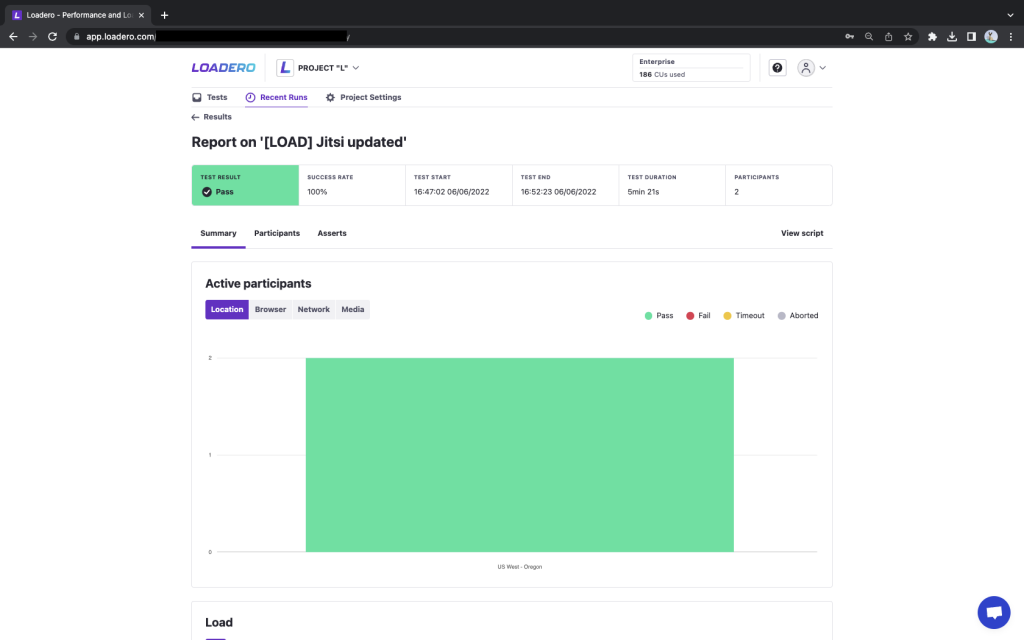

Overview of Loadero

Loadero runs tests on Google Chrome and Mozilla Firefox browsers to simulate real users during test runs. Its web UI automation scripts can be written in 3 languages: JavaScript, Java, and Python. Loadero test scripts can be executed from 12 locations around the globe, and network connection parameters and media settings can be configured for all test participants. Loadero also returns WebRTC statistics as test run results.

Comparing the tools

Both Loadero and testRTC require users to create and configure tests, which includes writing a test script to be executed during test runs. For users who lack the necessary skills, both vendors offer test creation as a service performed by their own teams.

A test consists of a test script and the configuration of the virtual users. Virtual users, which are actually cloud-hosted automated browsers, are referred to as “participants” by Loadero, whereas testRTC chooses to refer to theirs as “probes”.

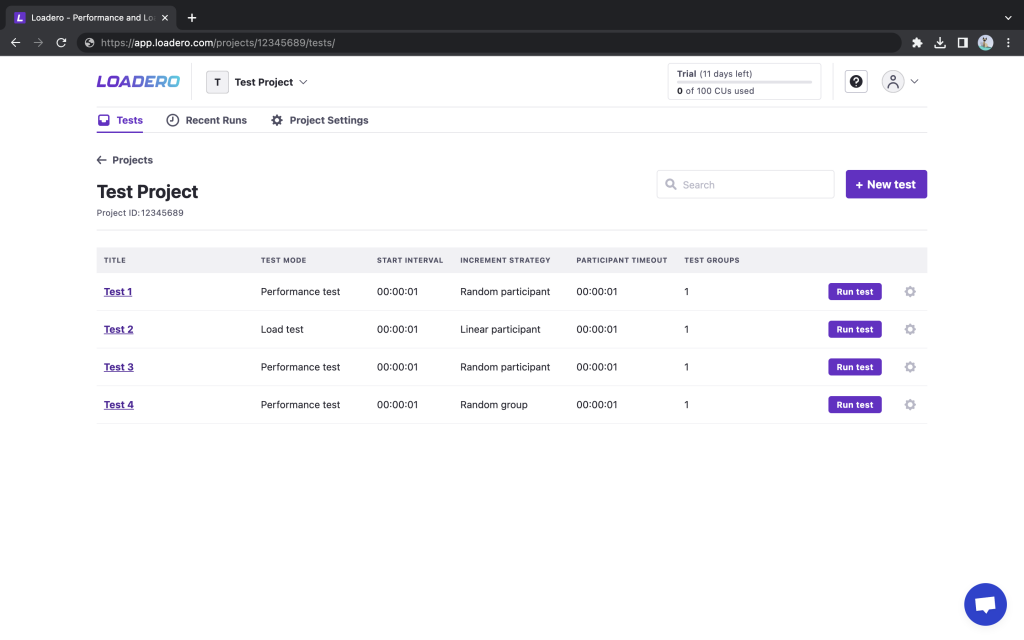

Test configuration

Creating a new test usually begins with its configuration: setting test-wide parameters that define the behavior of the test and all its participants. Although Loadero and testRTC both use cloud-hosted browsers and web UI automation, their test settings differ quite significantly.

Test settings in Loadero

Besides the Title, users in Loadero are required to configure the following test settings:

- Test mode

There are 3 test modes in Loadero:

  - Performance test is suitable for small-scale tests (50 concurrent participants or fewer). Besides WebRTC stats, this mode provides other handy data, such as client-side machine resource usage stats.

  - Load test is suitable for testing scalability by running thousands of concurrent participants.

  - Session record test provides a recording of what happened on each participant’s screen during the test script execution.

- Start interval

This parameter, often called ramp-up time in other services, sets the total amount of time over which all participants start the test run.

- Increment strategy

Specifies how participant start times are distributed over the start interval. This gives you control over how test participants access the tested solution. You can avoid triggering DDoS protection, make sure some user flows start earlier than others, or simulate real-life users who join with random delays.

- Participant timeout

Specifies the time limit for each individual participant’s test script execution. This prevents overly long test runs when commands freeze or take longer than expected.
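The following minimal Python sketch makes the start interval and increment strategy concrete by computing start offsets for a group of participants. The function name and the two strategies are our own illustrative assumptions: "linear" spaces starts evenly, and "random" draws uniformly random starts. Loadero’s actual strategy names and formulas may differ.

```python
import random


def start_offsets(participants, start_interval_s, strategy="linear", seed=None):
    """Return each participant's start offset in seconds (hypothetical model).

    linear: starts are evenly spaced across [0, start_interval_s)
    random: each start is drawn uniformly from [0, start_interval_s]
    """
    if participants <= 0:
        return []
    if strategy == "linear":
        step = start_interval_s / participants
        return [i * step for i in range(participants)]
    if strategy == "random":
        rng = random.Random(seed)  # seeded so a run can be reproduced
        return sorted(rng.uniform(0, start_interval_s) for _ in range(participants))
    raise ValueError(f"unknown strategy: {strategy!r}")


if __name__ == "__main__":
    print(start_offsets(5, 10))  # [0.0, 2.0, 4.0, 6.0, 8.0]
    print(start_offsets(5, 10, strategy="random", seed=42))
```

With a linear strategy and a 10-second start interval, 5 participants start 2 seconds apart. A random strategy spreads them unpredictably, which is closer to real users joining a call.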

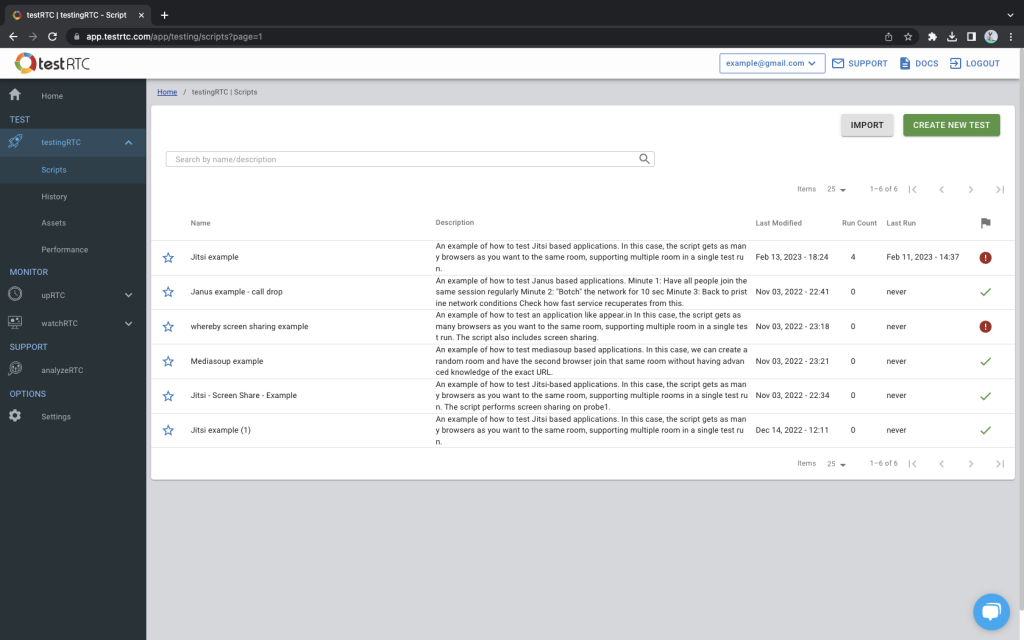

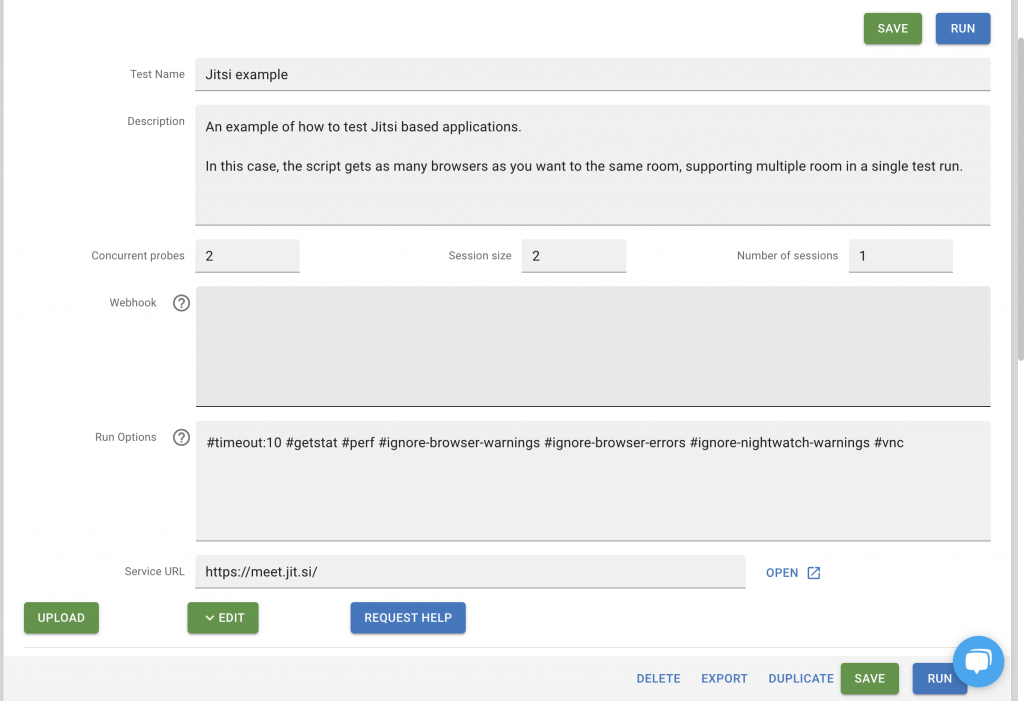

Test settings in testRTC

Users in testRTC are required to configure the following test settings:

- Concurrent probes

The number of concurrent probes defines how many probes (simulated users) execute the test simultaneously.

- Session size

Session size defines the number of probes in each test session. Sessions run in parallel with one another when multiple sessions are created. For example, if the number of concurrent probes is set to 2 and the session size is set to 1, then 2 parallel test sessions are created, each with one probe. Each probe has 2 parameters: a unique ID inside the session and a session ID.

Apart from required fields, the user may configure optional fields as well:

- Webhook

A webhook that is called at the end of a test run.

- Run options

Run options define other advanced test settings, such as the timeout, turning off the ignoring of browser warnings, or enabling the collection of webrtc-internals.

- Service URL

Points to the service where test execution starts, which allows tracking probes manually.

Differences in how Loadero and testRTC distribute simulated users

| Probe distribution in testRTC | Participant/group distribution in Loadero |
| --- | --- |
| testRTC tends to distribute probes and probe configurations equally between test sessions (if multiple are created). For example, if a user has created 2 machine profiles, A and B, and set concurrent probes to 4 and session size to 2, then 2 sessions are created, each with 2 probes: one with machine profile A and one with profile B. | Participants and participant groups are distributed according to instructions defined in the test script. You can create multiple sessions and assign one or more specific groups or participants to a given session with the help of Loadero variables, but managing groups and participants between sessions is the user’s responsibility. Each participant’s settings are configured separately. |
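The testRTC example in the table (4 concurrent probes, session size 2, machine profiles A and B) can be reproduced with a short Python model. The model makes two simplifying assumptions of our own, not documented testRTC behavior: the number of concurrent probes is a multiple of the session size, and profiles are assigned round-robin within each session.

```python
def build_sessions(concurrent_probes, session_size, machine_profiles):
    """Split probes into parallel sessions, cycling machine profiles inside each session.

    Hypothetical model of testRTC-style distribution; not testRTC's code.
    Returns a list of sessions, each a list of probe dicts.
    """
    if session_size <= 0 or concurrent_probes <= 0 or concurrent_probes % session_size:
        raise ValueError("concurrent_probes must be a positive multiple of session_size")
    if not machine_profiles:
        raise ValueError("at least one machine profile is required")
    sessions = []
    for session_index in range(concurrent_probes // session_size):
        session = [
            {
                "session_id": session_index + 1,
                "probe_id": probe_index + 1,  # unique only inside its session
                "profile": machine_profiles[probe_index % len(machine_profiles)],
            }
            for probe_index in range(session_size)
        ]
        sessions.append(session)
    return sessions


if __name__ == "__main__":
    for session in build_sessions(4, 2, ["A", "B"]):
        print([(p["session_id"], p["probe_id"], p["profile"]) for p in session])
    # [(1, 1, 'A'), (1, 2, 'B')]
    # [(2, 1, 'A'), (2, 2, 'B')]
```

In Loadero, no such automatic split happens. The test script decides which session each participant joins, with the help of Loadero variables.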

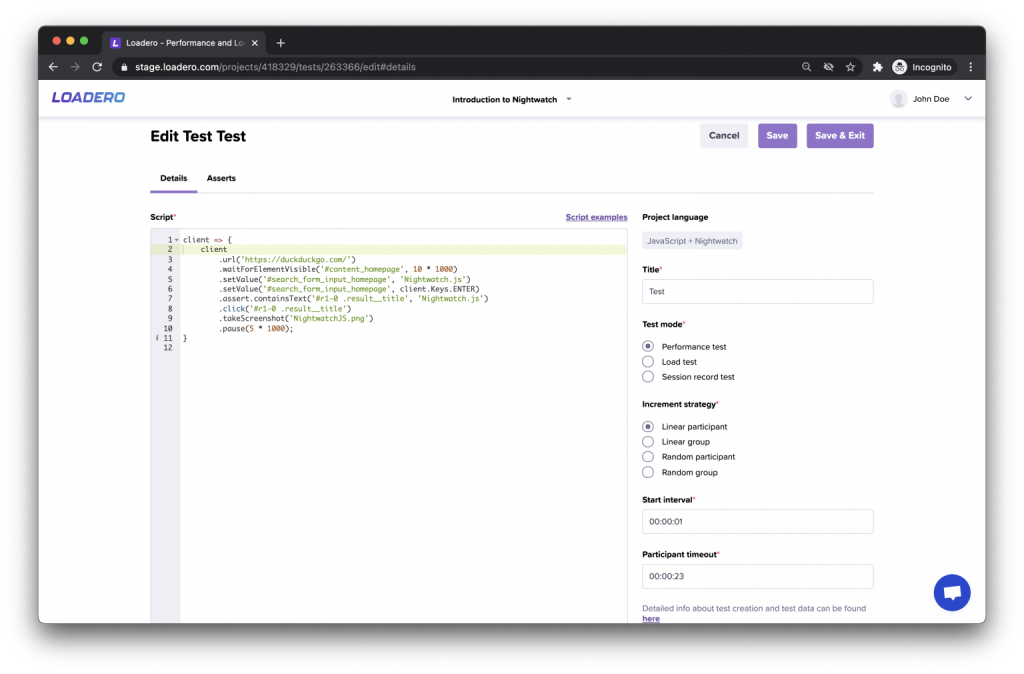

Test script creation

Both Loadero and testRTC require users to write test scripts by themselves using programming languages. Although the general idea of a web UI automation script execution in cloud-hosted browsers is the same for both tools, there are many differences in details.

Comparison of supported languages

Both tools allow users to write test scripts in JavaScript with the Nightwatch.js framework. It is the only test scripting option in testRTC, while Loadero also supports Java with the TestUI framework and Python with the Py-TestUI framework.

Comparison of custom commands

To extend what test scenarios can do, both Loadero and testRTC have developed several custom commands. Most of these commands change network settings, take screenshots, ignore browser alerts, or otherwise simulate real-life actions and conditions for WebRTC testing.

| Description | testRTC | Loadero (JS + Nightwatch) |
| --- | --- | --- |
| Update network settings | .rtcSetNetwork(), .rtcSetNetworkProfile() | .updateNetwork() |
| Take a screenshot | .rtcScreenshot() | .takeScreenshot() |
| Upload/Set a file | – | .setFile() |
| Wait for the download to finish | – | .waitForDownloadFinished() |
| Set a request header | – | .setRequestHeader() |
| Set User-agent | – | .setUserAgent() |
| Ignore a browser alert | – | .ignoreAlert() |
| Measure a step’s completion time | .rtcStartTimer() | .timeExecution() |
| perform() with timeout | – | .performTimed() |
| Synchronization | .rtcSetSessionValue(), .rtcWaitForSessionValue(), .rtcSetTestValue(), .rtcWaitForTestValue() | – |
| Debug commands | .rtcInfo() | – |
| Assertion and expectation commands | .rtcSetTestExpectation(), .rtcSetEventsExpectation(), .rtcSetCustomExpectation(), .rtcSetCustomEventsExpectation() | – |

Important: Because the tools are different in many ways, the lack of some custom commands does not necessarily mean that such a feature is not present. For example, Loadero does not have custom commands for “Assertion and expectation”, but it allows setting assertions as performance metric expectations in the test configuration without scripting.
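Judging by their names, testRTC’s synchronization commands (.rtcSetSessionValue() and .rtcWaitForSessionValue(), plus the test-wide pair) follow a publish/wait pattern. One probe sets a value, for example a room URL once the host has created a room, and other probes wait until it appears. The Python model below illustrates that pattern with a condition variable. The class and method names are hypothetical, and the model runs in a single process rather than across cloud browsers.

```python
import threading


class SharedValues:
    """Hypothetical in-process model of set-value / wait-for-value synchronization."""

    def __init__(self):
        self._values = {}
        self._changed = threading.Condition()

    def set_value(self, key, value):
        """Publish a value and wake up everyone waiting on this store."""
        with self._changed:
            self._values[key] = value
            self._changed.notify_all()

    def wait_for_value(self, key, timeout_s):
        """Block until `key` is published, then return its value.

        Raises TimeoutError if nobody publishes it within timeout_s seconds.
        """
        with self._changed:
            published = self._changed.wait_for(lambda: key in self._values, timeout=timeout_s)
            if not published:
                raise TimeoutError(f"{key!r} was not set within {timeout_s} s")
            return self._values[key]


if __name__ == "__main__":
    shared = SharedValues()
    # The "host" publishes a room URL after a short delay; the "guest" waits for it.
    host = threading.Timer(0.1, shared.set_value, args=("roomUrl", "https://example.test/room/1"))
    host.start()
    print(shared.wait_for_value("roomUrl", timeout_s=5))  # https://example.test/room/1
    host.join()
```

The timeout matters as much as the wait. Without it, a probe whose peer never arrives would hang until the overall test timeout.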

Simulated users configuration in Loadero and testRTC

Simulated users are called “participants” in Loadero and “probes” in testRTC. Their configurations are referred to as “participant settings” and “machine profiles”, respectively.

Configuration settings

| Setting | Description | testRTC | Loadero |
| --- | --- | --- | --- |
| Browser | Ability to change the test agent’s browser | ✅ | ✅ |
| Location | Ability to change the test agent’s location | ✅ | ✅ |
| Media | Ability to set the test agent’s media to simulate a web camera and microphone | ✅ | ✅ |
| Network | Ability to change the test agent’s network conditions | ✅ | ✅ |
| Count | Ability to set how many test agents use a given test agent configuration | | ✅ |
| Firewall | Ability to configure firewall settings | ✅ | |
| Compute Units | Ability to set how powerful the simulated user’s machine is | | ✅ |
| Group | Ability to group simulated users into clusters | | ✅ |

Now let’s take a closer look at the parameters and how exactly their available options differ in both tools.

Browsers

| testRTC | Loadero |
| --- | --- |
| Google Chrome Stable (v107), Google Chrome Unstable (v109), Google Chrome Beta (v108), Google Chrome Previous Stable (v106) | Google Chrome 106, 107, 108, 109, 110, and 110.0.5481.77; Mozilla Firefox 106, 107, 108, 109, 110, and 110.0 |

Note: at the time this blog post was published, the newest versions were Google Chrome v110.0.5481.100 and Mozilla Firefox v110.0.

Locations

Simulating users in different locations is an important part of testing WebRTC applications. Real users can be spread around the globe, and latency can affect the quality of the audio and video they get in an application.

| testRTC | Loadero |
| --- | --- |
| East US, West US, Europe, London, Asia, Japan, India, Australia, Brazil, Canada | US East – Oregon, US East – North Virginia, US West – Oregon, EU Central – Frankfurt, EU West – Ireland, EU West – Paris, AP East – Hong Kong, AP Northeast – Tokyo, AP Northeast – Seoul, AP South – Mumbai, AP Southeast – Sydney, SA East – Sao Paulo |

Media configuration

Media configuration lets users choose what type of media the browsers use to simulate the simulated users’ webcams and microphones. testRTC provides 11 predefined media presets, whilst Loadero lets users configure video and audio separately, resulting in 264 possible media configurations.

Note: Loadero video and audio bitrate values are uncompressed.

testRTC media presets:

| Preset | Video | Audio |
| --- | --- | --- |
| VOA-VGA | 640×480, 2948 Kbps | 121 Kbps |
| KrankyGeek-1080p | 1920×1080, 8069 Kbps | 173 Kbps |
| Salsa-720p | 1280×720, 9667 Kbps | 192 Kbps |
| Tame_Impala-320x240p | 320×240, 758 Kbps | 128 Kbps |
| VOA-VGA04 | 640×480, 2948 Kbps | 121 Kbps |
| VOA-VGA10 | 640×480, 2948 Kbps | 121 Kbps |
| VOA-audio-only | – | 98 Kbps |
| WebRTC-Tutorial-Audio10 | – | 128 Kbps |
| WebRTC-Tutorial-Audio20 | – | 128 Kbps |
| WebRTC-Tutorial-Audio40 | – | 128 Kbps |
| birds | 192×96, 354 Kbps | – |

Loadero video feeds:

| Video feed | Bitrate |
| --- | --- |
| 1080p video feed | 746 497 Kbps |
| 1080p @ 15/30/5 FPS dynamic video | 373 248 / 746 497 / 124 416 Kbps |
| 1080p video feed with center marker | 746 497 Kbps |
| 1080p video feed with top left side marker | 746 497 Kbps |
| 1080p @ 30 FPS meeting video | 746 497 Kbps |
| 240p video feed | 36 807 Kbps |
| 240p @ 15/30/5 FPS dynamic video | 18 403 / 36 807 / 6 134 Kbps |
| 240p video feed with top left side marker | 36 807 Kbps |
| 240p @ 30 FPS meeting video | 36 807 Kbps |
| 360p video feed | 82 945 Kbps |
| 360p @ 15/30/5 FPS dynamic video | 41 472 / 82 945 / 13 824 Kbps |
| 360p video feed with top left side marker | 82 945 Kbps |
| 360p @ 30 FPS meeting video | 82 945 Kbps |
| 480p video feed | 147 572 Kbps |
| 480p @ 15/30/5 FPS dynamic video | 73 786 / 147 572 / 24 595 Kbps |
| 480p video feed with top left side marker | 147 572 Kbps |
| 480p @ 30 FPS meeting video | 147 572 Kbps |
| 720p video feed | 331 777 Kbps |
| 720p @ 15/30/5 FPS dynamic video | 165 888 / 331 777 / 55 296 Kbps |
| 720p video feed with marker | 331 777 Kbps |
| 720p video feed with top left side marker | 331 777 Kbps |
| 720p @ 30 FPS meeting video | 331 777 Kbps |
| Default video feed (browser built-in video) | – |

Loadero audio feeds:

| Audio feed | Format | Bitrate |
| --- | --- | --- |
| DTMF tone audio feed | 44.1 kHz, 2 channels | 1 411 Kbps |
| 128kbps audio feed | 44.1 kHz, 2 channels | 1 411 Kbps |
| -20dB audio feed | 44.1 kHz, 2 channels | 1 411 Kbps |
| -30dB audio feed | 44.1 kHz, 2 channels | 1 411 Kbps |
| -50dB audio feed | 44.1 kHz, 2 channels | 1 411 Kbps |
| Silent audio feed | 44.1 kHz, 2 channels | 1 411 Kbps |
| ViSQOL speech audio feed | 44.1 kHz, 2 channels | 1 411 Kbps |
| Default audio feed (browser built-in audio) | – | – |
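The uncompressed bitrates in the Loadero tables can be approximated with simple arithmetic. This Python sketch rests on our own assumptions, not published Loadero specifications:

- 8-bit YUV 4:2:0 video (12 bits per pixel);
- frame widths of 426 px for 240p and 854 px for 480p;
- 16-bit PCM audio.

With those assumptions, the results land within about 1 Kbps of the listed values.

```python
def raw_video_kbps(width, height, fps, bits_per_pixel=12):
    """Uncompressed video bitrate in Kbps (1 Kbps = 1000 bit/s).

    bits_per_pixel=12 corresponds to 8-bit YUV 4:2:0: a full-resolution luma
    plane plus two quarter-resolution chroma planes.
    """
    return width * height * bits_per_pixel * fps / 1000


def raw_audio_kbps(sample_rate_hz, bits_per_sample, channels):
    """Uncompressed PCM audio bitrate in Kbps."""
    return sample_rate_hz * bits_per_sample * channels / 1000


if __name__ == "__main__":
    frame_sizes = {"240p": (426, 240), "360p": (640, 360), "480p": (854, 480),
                   "720p": (1280, 720), "1080p": (1920, 1080)}
    for name, (width, height) in frame_sizes.items():
        # 30 FPS, plus the 15 and 5 FPS variants of the dynamic feeds
        print(name, [round(raw_video_kbps(width, height, fps)) for fps in (30, 15, 5)])
    print("audio", raw_audio_kbps(44_100, 16, 2))  # 1411.2
```

The 15 and 5 FPS figures for the dynamic feeds are exactly a half and a sixth of the 30 FPS figure. The listed values relate to each other the same way.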

Network settings

Both tools can introduce network limitations for the simulated users. This is a very important feature for WebRTC testing, since it lets you check the audio and video quality that users with imperfect network connections will experience.

Important: Loadero network values are given in incoming/outgoing format. If no value is given for a parameter, that parameter is not limited.

| testRTC | Loadero |
| --- | --- |
| No throttling | Default |
| Regular 4G: 4 Mbps bandwidth, 10 ms latency, 0.1% packet loss | 4G network: 100 / 50 Mbps bandwidth, 45 ms latency, 0.2% packet loss, 5 / 15 ms jitter |
| Regular 3G: 1200 Kbps bandwidth, 20 ms latency, 0.2% packet loss | 3G network: 1200 Kbps bandwidth, 250 ms latency, 0.5% packet loss, 50 ms jitter |
| – | 3.5G/HSDPA: 20 / 10 Mbps bandwidth, 150 ms latency, 0.5% packet loss, 10 ms jitter |
| DSL: 10 Mbps bandwidth, 10 ms latency, 0.1% packet loss | – |
| – | Asymmetric: 500 / 1000 Kbps bandwidth, 50 ms latency, 10 ms jitter |
| Wifi: 30 Mbps bandwidth, 30 ms latency, 0.1% packet loss | – |
| Regular 2G: 250 Kbps bandwidth, 50 ms latency, 1% packet loss | – |
| – | GPRS: 80 / 20 Kbps bandwidth, 650 ms latency, 1% packet loss, 100 ms jitter |
| Edge: 500 Kbps bandwidth, 80 ms latency, 1% packet loss | Edge: 200 Kbps bandwidth |
| Wifi High packet loss: 30 Mbps bandwidth, 40 ms latency, 5% packet loss | – |
| Poor 3G: 1200 Kbps bandwidth, 90 ms latency, 5% packet loss | Poor 4G: 4000 Kbps bandwidth, 90 ms latency, 5% packet loss |
| Unstable 4G: 4 Mbps bandwidth, 500 ms latency, 10% packet loss | – |
| Very Bad Network: 1 Mbps bandwidth, 500 ms latency, 10% packet loss | Satellite phone: 1000 / 256 Kbps bandwidth, 600 ms latency |
| Call Drop: 50 Kbps bandwidth, 500 ms latency, 20% packet loss | – |
| – | 100% packet loss |
| 50% Packet Loss: 40 Mbps bandwidth, 10 ms latency, 50% packet loss | 20% packet loss |
| – | 10% packet loss |
| – | 5% packet loss |
| High Latency 4G: 4 Mbps bandwidth, 600 ms latency, 0.2% packet loss | – |
| High Packet Loss 4G: 4 Mbps bandwidth, 5 ms latency, 20% packet loss | – |
| – | High Latency: 500 ms latency, 50 ms jitter |
| – | High jitter: 200 ms latency, 100 ms jitter |
| Custom, with the use of a custom command | Custom, with the use of a custom command |
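A small data model can capture Loadero’s incoming/outgoing convention and the rule that a missing value means “not limited”. This Python sketch is hypothetical: the class and field names are ours. Treating a single-value entry, such as Edge’s 200 Kbps, as applying to both directions is also our assumption.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# (incoming, outgoing); None in a slot means "not limited" in that direction
Directional = Tuple[Optional[float], Optional[float]]
UNLIMITED: Directional = (None, None)


@dataclass(frozen=True)
class NetworkProfile:
    """Hypothetical model of a throttling profile in incoming/outgoing format."""
    name: str
    bandwidth_kbps: Directional = UNLIMITED
    jitter_ms: Directional = UNLIMITED
    latency_ms: Optional[float] = None
    packet_loss_pct: Optional[float] = None

    def limited_parameters(self):
        """Names of the parameters that actually restrict the connection."""
        limited = []
        if self.bandwidth_kbps != UNLIMITED:
            limited.append("bandwidth")
        if self.jitter_ms != UNLIMITED:
            limited.append("jitter")
        if self.latency_ms is not None:
            limited.append("latency")
        if self.packet_loss_pct is not None:
            limited.append("packet loss")
        return limited


def both_ways(value):
    """Use one value for both directions (our assumption for single-value entries)."""
    return (value, value)


if __name__ == "__main__":
    profiles = [
        NetworkProfile("Default"),
        NetworkProfile("4G network", bandwidth_kbps=(100_000, 50_000), jitter_ms=(5, 15),
                       latency_ms=45, packet_loss_pct=0.2),
        NetworkProfile("Edge", bandwidth_kbps=both_ways(200)),
        NetworkProfile("High jitter", jitter_ms=both_ways(100), latency_ms=200),
    ]
    for profile in profiles:
        print(profile.name, profile.limited_parameters() or "not limited")
```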

Aside from network configuration, testRTC allows setting one of four firewall values:

- No Firewall

- FW – HTTPS Allowed

- FW – HTTP and HTTPS Allowed

- FW – Baseline

Assertion of performance metrics

While testing, you may need to verify and assert certain metric values instead of manually inspecting and analyzing WebRTC statistics. Both Loadero and testRTC provide assertion functionality, but in different ways.

Assertion in testRTC

Metric assertions in testRTC are set by calling the following methods in the test script:

- .rtcSetTestExpectation(String)

- .rtcSetEventsExpectation(String)

- .rtcSetCustomExpectation(String)

- .rtcSetCustomEventsExpectation(String)

where String is a particular metric’s assertion. Media metric assertions require the following String format:

“media_format.direction.channels.criteria”

- media_format has 2 possible values: audio and video;

- direction is either in or out;

- the channels level is optional and, when used, evaluates the bitrate of each channel separately;

- criteria consists of the metric to be compared, the operator, and the expected value, e.g., video.in > 0.

The following comparison operators are available: ==, >, <, >=, <=, !=

As for metrics, these metrics may be asserted:

- Number of channels

- Emptiness of channel

- Bitrate (max/min postfix available)

- Packet loss (%)

- Packets lost

- Data

- Packets

- Roundtrip

- Jitter

- Codec

- FPS

- Width and/or Height

Failed assertions are shown in the “Overview” section in test results; if an assertion fails, then the test itself does as well.

Apart from media, it is possible to assert probe and performance metrics.
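The following hypothetical Python mini-evaluator illustrates the “metric, operator, expected value” shape of these criteria. It checks expressions such as video.in.bitrate > 500 against a nested dictionary of collected stats. It is not testRTC’s implementation: the dictionary layout and metric names are our assumptions, and testRTC’s own grammar (for example, the optional channels level) is richer.

```python
import operator
import re

COMPARATORS = {
    "==": operator.eq, "!=": operator.ne,
    ">=": operator.ge, "<=": operator.le,
    ">": operator.gt, "<": operator.lt,
}
# "<dotted.metric.path> <operator> <number>"; two-character operators come
# first in the alternation so that ">=" is never read as ">".
EXPECTATION = re.compile(r"^\s*([\w.]+)\s*(==|!=|>=|<=|>|<)\s*(-?\d+(?:\.\d+)?)\s*$")


def check_expectation(expression, stats):
    """Return True if the metric at the dotted path satisfies the comparison."""
    match = EXPECTATION.match(expression)
    if match is None:
        raise ValueError(f"cannot parse expectation: {expression!r}")
    path, op, expected = match.groups()
    value = stats
    for key in path.split("."):
        value = value[key]  # raises KeyError if the metric was not collected
    return COMPARATORS[op](value, float(expected))


if __name__ == "__main__":
    stats = {
        "video": {"in": {"bitrate": 950, "fps": 24}},
        "audio": {"out": {"jitter": 12}},
    }
    print(check_expectation("video.in.bitrate > 500", stats))  # True
    print(check_expectation("audio.out.jitter <= 10", stats))  # False
```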

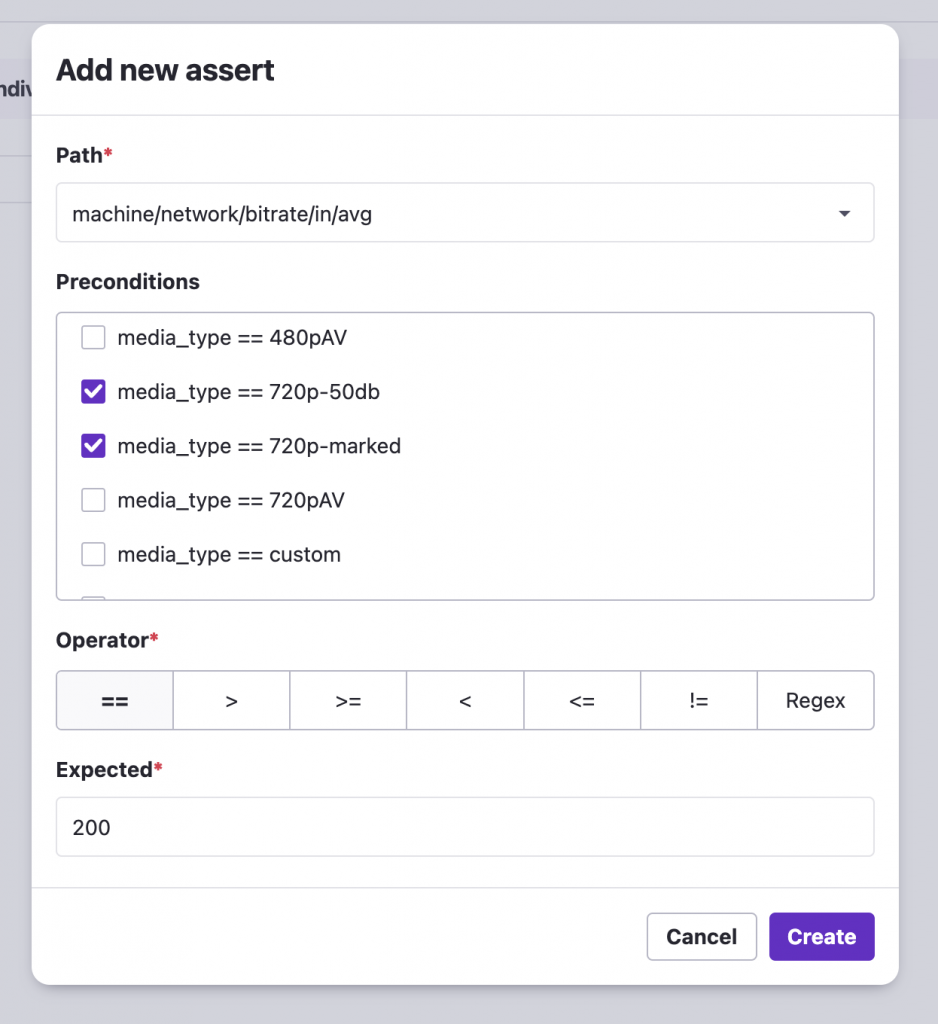

Assertion in Loadero

Metric assertions in Loadero are created in the “Asserts” tab of the “Test Creation” page, in the following steps:

- Select statistic

audio and video statistics are available for WebRTC testing; apart from them, CPU, network, and RAM machine statistics are available.

- Select measurement

The user can select the following measurements for a given statistic:

| audio | video |
| --- | --- |
| bitrate | bitrate |
| bytes | bytes |
| codec | codec |
| connections | connections |
| jitter | jitter |
| jitterBuffer | jitterBuffer |
| level | level |
| packets | packets |
| packetsLost | packetsLost |
| | RTT |
| | FPS |
| | frameHeight |
| | frameWidth |

- Select direction: In or Out

- Select aggregator: 1st, 5th, 25th, 50th, 75th, 95th, or 99th percentile, avg, max, rstddev, stddev, or total

- Select operator: Regex, ==, >, <, >=, <=, or !=

- Enter expected value

Apart from asserts, preconditions can also be configured in Loadero. Preconditions use each test agent’s configuration to decide which test agents’ metrics an assert is applied to.
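The assert workflow above can be sketched in Python. An aggregator is applied to a metric’s samples, the result is compared with an expected value, and a precondition can limit which participants are checked. This is a hypothetical model, not Loadero’s implementation. The data layout is ours, and so are the aggregator definitions:

- percentiles use linear interpolation;
- stddev is the population standard deviation;
- rstddev is the relative standard deviation, in percent of the mean.

```python
import math
import operator
import re
import statistics


def percentile(samples, p):
    """p-th percentile with linear interpolation between the closest ranks."""
    ordered = sorted(samples)
    rank = (len(ordered) - 1) * p / 100
    lower, upper = math.floor(rank), math.ceil(rank)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (rank - lower)


PERCENTILES = {"1st": 1, "5th": 5, "25th": 25, "50th": 50, "75th": 75, "95th": 95, "99th": 99}
AGGREGATORS = {name: (lambda s, p=p: percentile(s, p)) for name, p in PERCENTILES.items()}
AGGREGATORS.update({
    "avg": statistics.fmean,
    "max": max,
    "stddev": statistics.pstdev,  # population standard deviation (assumed)
    "rstddev": lambda s: statistics.pstdev(s) / statistics.fmean(s) * 100,  # % of mean (assumed)
    "total": sum,
})
COMPARATORS = {"==": operator.eq, "!=": operator.ne, ">": operator.gt,
               "<": operator.lt, ">=": operator.ge, "<=": operator.le}


def compare(actual, op, expected):
    """Apply one of the assert operators; 'regex' matches against the value's text."""
    if op == "regex":
        return re.search(str(expected), str(actual)) is not None
    return COMPARATORS[op](actual, expected)


def run_assert(participants, metric, aggregator, op, expected, precondition=lambda config: True):
    """Evaluate one assert per participant whose configuration passes the precondition.

    Returns {participant name: passed}; participants failing the precondition are skipped.
    """
    results = {}
    for participant in participants:
        if not precondition(participant["config"]):
            continue
        value = AGGREGATORS[aggregator](participant["samples"][metric])
        results[participant["name"]] = compare(value, op, expected)
    return results


if __name__ == "__main__":
    participants = [
        {"name": "chrome-4g", "config": {"browser": "chrome", "network": "4g"},
         "samples": {"video.in.bitrate": [900, 950, 1000, 1100]}},
        {"name": "firefox-3g", "config": {"browser": "firefox", "network": "3g"},
         "samples": {"video.in.bitrate": [200, 250, 300, 350]}},
    ]
    # Assert: average incoming video bitrate >= 500, only for participants on 4G
    print(run_assert(participants, "video.in.bitrate", "avg", ">=", 500,
                     precondition=lambda config: config["network"] == "4g"))
    # {'chrome-4g': True}
    print(run_assert(participants, "video.in.bitrate", "avg", ">=", 500))
    # {'chrome-4g': True, 'firefox-3g': False}
```

Without the precondition, the 3G participant fails the same bitrate threshold. That is the situation preconditions are meant to handle: expectations that only make sense for certain configurations.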

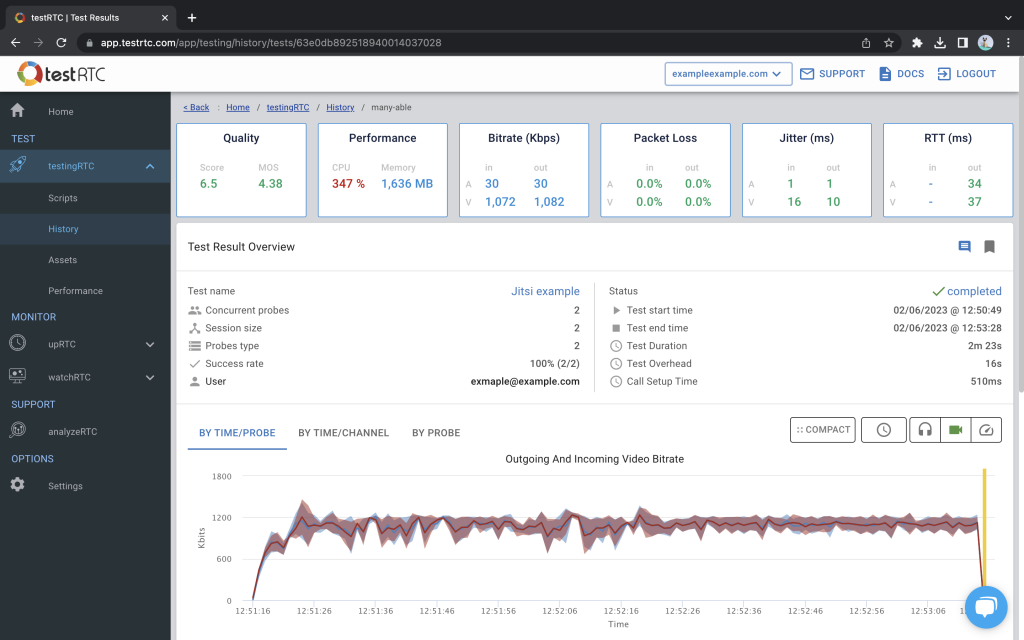

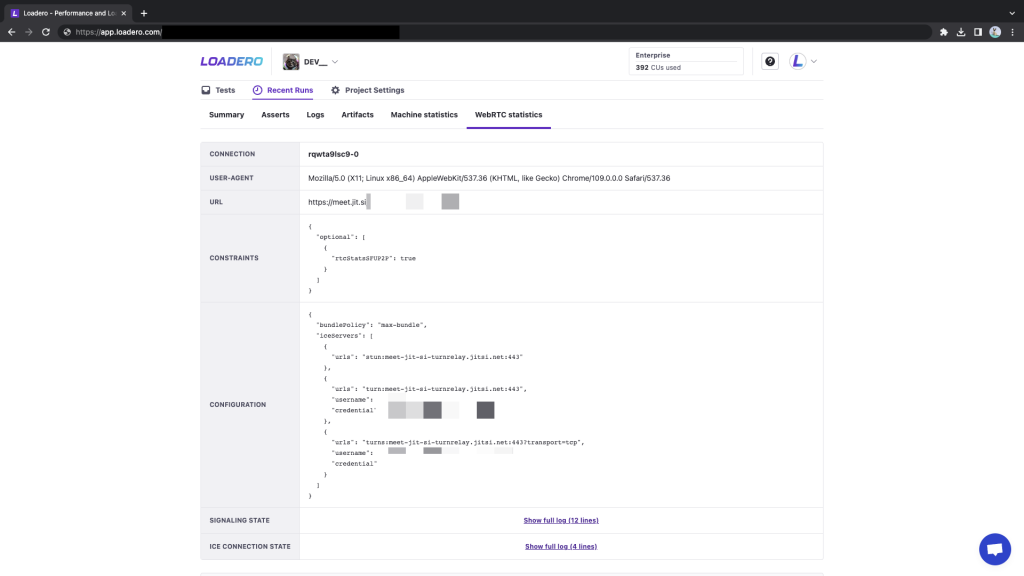

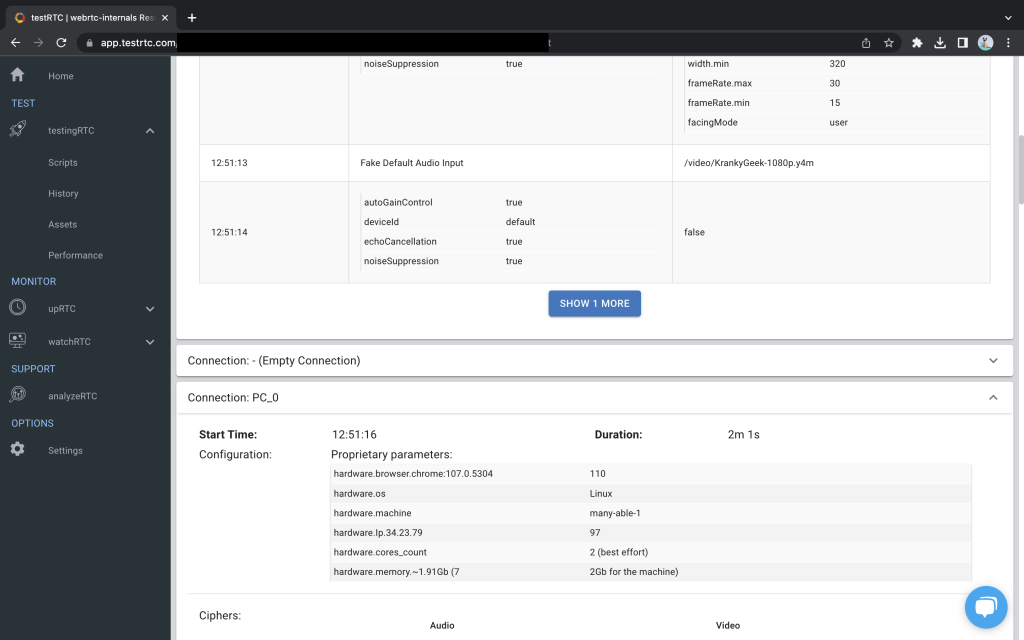

WebRTC statistics in results reports

Both Loadero and testRTC return various WebRTC statistics as test output. Both can analyze these statistics for any particular simulated user as well as for the entire test run.

| WebRTC statistic | testRTC | Loadero |
| --- | --- | --- |
| Bitrate In/Out (audio and video) | ✅ | ✅ |
| Packets In/Out (audio and video) | ✅ | |
| Packets Lost In/Out (audio and video) | ✅ | ✅ |
| Jitter In/Out (audio and video) | ✅ | ✅ |
| Level In/Out (audio) | ✅ | |
| Round Trip Time, RTT (audio and video) | ✅ | ✅ |
| Codec (audio and video) | ✅ | ✅ |
| Frame Height/Width (video) | ✅ | ✅ |
| Resolution (video) | ✅ | ✅ |
| FPS (video) | ✅ | ✅ |
| MOS Score (audio) | ✅ | |

More detailed WebRTC statistics include:

| WebRTC statistic | testRTC | Loadero |
| --- | --- | --- |
| Signaling State | ✅ | ✅ (logs) |
| Connection name | ✅ | ✅ |
| ICE Connection state (logs) | ✅ | ✅ |
| Configuration | ✅ | ✅ |
| User-Agent | ✅ | |
| URL | ✅ | |
| Remote Inbound/Outbound RTP | ✅ | ✅ |
| Inbound/Outbound RTP | ✅ | ✅ |
| Track (ID) | ✅ | ✅ |
| Candidate pair | ✅ | |
| Media source | ✅ | |
| Ciphers | ✅ | |
| WebRTC internal dump (downloadable) | ✅ | ✅ |

In conclusion, both Loadero and testRTC are robust options for WebRTC testing. While they share many features and much of their functionality, each tool can be the better choice in different cases. There is no absolute winner, and there may never be one: the choice depends on the value each tool brings to your specific needs.

It is up to you to carefully consider the differences mentioned here and determine which tool is the best fit for your testing needs and what value it will bring to your development process.

We hope this blog post helps you select the right tool, but we highly recommend requesting a demo of both tools so that you can compare them in action and make an informed decision.